Epilot Datalake

Welcome to the documentation for our Data Lake feature, which serves as the centralized repository for real-time event streams of entity operations, snapshots of workflow executions, journey analytics and portal analytics data. This feature empowers users to access and analyze essential data generated by the epilot portal, including changes to entities such as orders, opportunities, contacts, accounts, products, interactions from user journeys, customer portal, installer portal and more.

Our Data Lake is seamlessly integrated with Clickhouse for data warehousing, enabling the users to leverage Business Intelligence (BI) tools and create insightful reports. This documentation will guide you through the key components of the Data Lake feature, including data schemas, usage, and credential management.

Feel free to contact our customer support or sales for help in enabling this datalake feature for your organization.

Data Schemas

Our Data Lake features two distinct datasets:

1. Entity Operations Event Streams

This dataset captures real-time event streams of entity operations.

The schema for entity operations is as follows:

{

"activity_id": "string", // ID for the individual entity operation/activity

"entity_id": "string", // ID of the entity being mutated

"org_id": "string", // Organization ID

"operation": "string", // Operation can be "createEntity," "updateEntity," or "deleteEntity"

"schema": "string", // Schema of the entity

"timestamp": "DateTime", // Timestamp of the entity mutation

"detail": "string", // Stringified JSON payload containing entity data

}

Fields of interest in this schema include:

Operation: Describes the type of entity operation, including creation, update, or deletion.

createEntity: This operation type is recorded when a new entity is created.updateEntity: This operation type is recorded when an existing entity is updated.deleteEntity: This operation type is recorded when an entity is deleted.

timestamp: Provides a timestamp for building time series reports.

activity_id and entity_id: These fields contain unique identifiers that can be used to track individual entity operations (activity_id) and identify the specific entity that was mutated (entity_id).

detail: The detail field contains a stringified JSON payload that includes the full entity data at the time of the operation. This payload can be parsed and used to access detailed information about the entity's state during the operation.

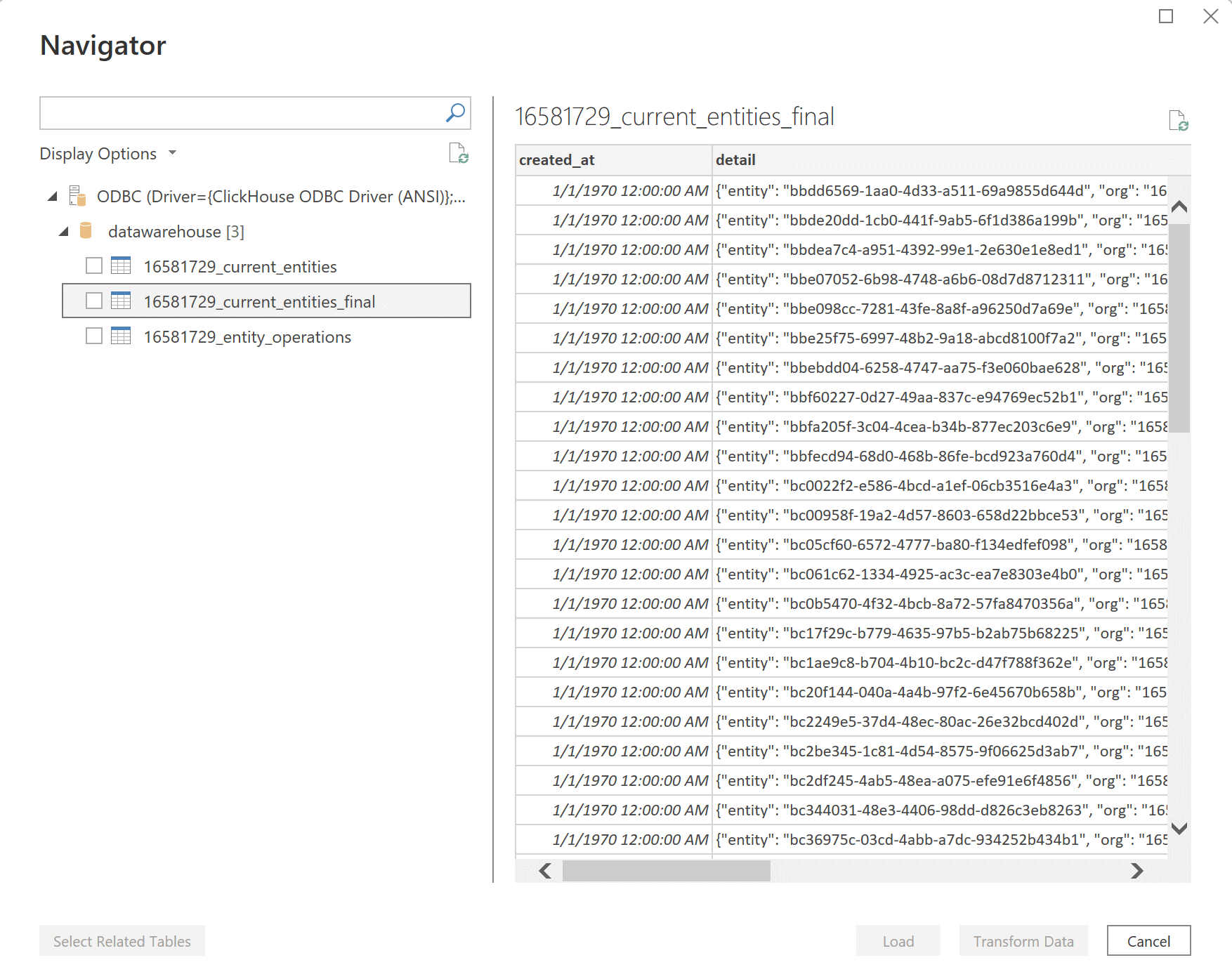

Additionally, we offer a simplified view called {org_id}_current_entities_final, which displays only the latest state of currently active entities in the organization, excluding deleted entities

2: Workflow Execution Snapshots

In addition to entity operations, we ingest real-time updates from workflow executions, allowing users to access current snapshots of workflow data. This dataset comprises five tables:

{org_id}_workflow_executions_final This table contains all the attributes of workflow execution data.

{org_id}_workflow_phases_final Here, you can find attributes of the various phases involved in a workflow execution.

{org_id}_workflow_tasks_final This table stores attributes related to individual steps or tasks within a workflow execution.

{org_id}_workflow_cancellations_final This table records data regarding the reasons for workflow execution cancellations.

{org_id}_workflow_contexts_final Contains information about related entities associated with a workflow execution.

To know more about workflow execution details, please refer here

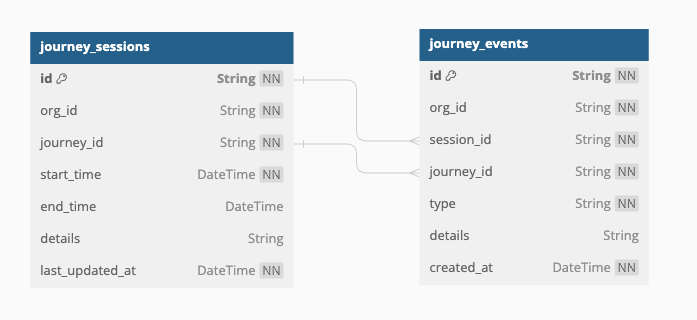

3. Journey Analytics

This dataset showcases the user sessions created for each journey and the interactions that occur within those sessions.

The schema for journey sessions is as follows:

{

"id": "string", // Unique identifier for the session (UUID)

"org_id": "string", // Unique identifier for the organization associated with the session (UUID)

"journey_id": "string", // Unique identifier for the user journey to which the session belongs (UUID)

"start_time": "datetime", // Timestamp indicating when the session started

"details": "string", // Additional details about the session, in JSON format

"end_time": "datetime", // Timestamp indicating when the session ended

"last_updated_at": "datetime", // Timestamp indicating when was the session last updated at

}

Fields of interest in this schema include:

details: This field possibly contains basic user's browser session details

deviceType: The type of device used by the user (e.g., mobile, desktop).osType: The operating system of the device (e.g., iOS, Android, Windows).browserTypeAndVersion: The browser name and version used by the user (e.g., Chrome 92, Firefox 89).screenResolution: The screen resolution of the user's device (e.g., 1920x1080).viewportSize: The size of the viewport in the browser (i.e., the visible area of the web page).colorDepth: The color depth of the user's display (e.g., 24).languagePreference: The preferred language set in the user's browser (e.g., en-US, fr-FR).ip: The IP address of the user's device when the event was recorded.embeddedIn: The website or platform on which the journey is embedded.isLauncherJourney: The journey for which the session was created is a launcher journey or not

The schema for journey events is as follows:

{

"id": "string", // Unique identifier for the event (UUID)

"org_id": "string", // Identifier of the organization associated with the event

"session_id": "string", // Unique identifier for the session in which the event occurred (UUID)

"journey_id": "string", // Unique identifier for the user journey to which the event belongs (UUID)

"type": "string", // Type of event (e.g., "step_navigation", "journey_submit", "journey_exit")

"details": "string", // Additional details about the event, typically in JSON format

"created_at": "datetime" // Timestamp indicating when the event was recorded

}

Fields of interest in this schema include:

type: This field contains the type of user interaction or event that occurs on the session

journey_load_time: The time it takes for the journey to load, typically measured from journey API response time.step_navigation: The event triggered when a user navigates from one step to another within the journey.journey_submit: The event triggered when a user completes and submits the journey.journey_exit: The event triggered when a user exits the journey, possibly by closing or refreshing the journey tab in the browser.

details: This field contains additional details about the type of event that occurs. Basically fromStepName, fromStepNumber, toStepName and toStepNumber on a step_navigation event, stepNumber and stepName on which the journey_exit occurred and time taken for journeys to load.

{org_id}_journey_sessions_final This table stores the above attributes related to journey sessions.

{org_id}_journey_events_final This table stores the above attributes related to journey events.

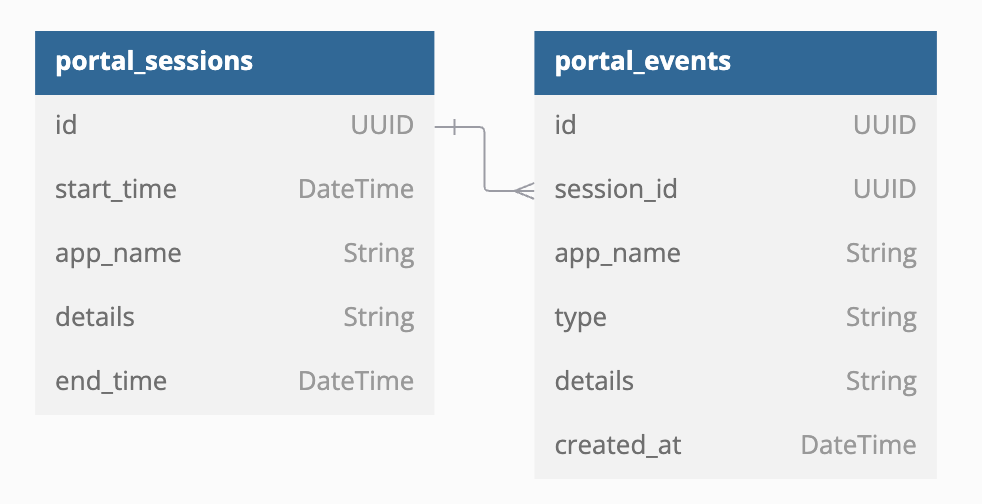

4. Portal Analytics

This dataset highlights user sessions generated during each portal login and details the interactions that occur within these sessions. It captures key metrics and events to provide insights into user behavior and engagement patterns.

The schema for portal sessions is as follows:

{

"id": "string", // Unique identifier for the session (UUID)

"org_id": "string", // Identifier of the organization associated with the event

"app_name": "string", // Name of the app for which the session was created (customer portal, installer portal etc.)

"start_time": "datetime", // Timestamp indicating when the session started

"details": "string", // Additional details about the session, in JSON format

"end_time": "datetime", // Timestamp indicating when the session ended

"last_updated_at": "datetime", // Timestamp indicating when was the session last updated at

}

Fields of interest in this schema include:

details: This field possibly contains basic user's browser session details

deviceType: The type of device used by the user (e.g., mobile, desktop).osType: The operating system of the device (e.g., iOS, Android, Windows).browserTypeAndVersion: The browser name and version used by the user (e.g., Chrome 92, Firefox 89).screenResolution: The screen resolution of the user's device (e.g., 1920x1080).viewportSize: The size of the viewport in the browser (i.e., the visible area of the web page).colorDepth: The color depth of the user's display (e.g., 24).languagePreference: The preferred language set in the user's browser (e.g., en-US, fr-FR).ip: The IP address of the user's device when the event was recorded.email: The email ID of the logged in user.domainName: The app domain in which the session was createdreferrer: The user was referred or directed from which site

The schema for portal events is as follows:

{

"id": "string", // Unique identifier for the event (UUID)

"org_id": "string", // Identifier of the organization associated with the event

"session_id": "string", // Unique identifier for the session in which the event occurred (UUID)

"app_name": "string", // Name of the app for which the event was created (customer portal, installer portal etc.)

"type": "string", // Type of event (e.g., "user_logged_in", "page_navigation", etc.)

"details": "string", // Additional details about the event, in JSON format

"created_at": "datetime" // Timestamp indicating when the event was recorded

}

Fields of interest in this schema include:

type: This field contains the type of user interaction or event that occurs on the session

user_logged_in: User successfully logged into the portal.user_registered: A new user successfully registered.add_contract_initiated: User started adding a new contract.additional_info_update_initiated: User began updating additional info (it is a wrapper term for all the additional or custom information section that an organization configures for its portal)additional_info_updated: User successfully updated additional information.additional_info_viewed: User viewed additional information details.all_documents_downloaded: User downloaded all available documents in a section.contract_added: A new contract was successfully added.contract_due_date_changed: User updated the contract’s due date.contract_payment_rate_changed: User changed the payment rate for a contract.contracts_listing_viewed: User viewed the list of all contracts.contract_viewed: User opened and viewed a specific contract.document_deleted: A document was removed by the user.document_downloaded: User downloaded a specific document.document_uploaded: A document was uploaded by the user.documents_listing_viewed: User viewed a list of uploaded documents.external_website_opened: User navigated to an external website.journey_opened: User initiated a new journey workflow.journey_closed: User exited a specific journey workflow.meter_reading_submission_initiated: User started submitting meter reading.meter_reading_submitted: A meter reading was successfully submitted.meters_listing_viewed: User viewed the list of available meters.meter_viewed: User opened details for a specific meter.meter_widget_viewed: User viewed a widget related to meters.opportunities_listing_viewed: User browsed the list of opportunities.opportunity_viewed: User opened and viewed an opportunity’s details.order_accepted: User accepted a specific order.order_refused: User declined a specific order.order_viewed: User viewed details of a particular order.orders_listing_viewed: User accessed the list of available orders.page_navigation: User navigated between application pages.password_changed: User successfully updated their account password.payments_listing_viewed: User browsed the list of all payments.product_viewed: User viewed details of a specific product.teaser_opened: User clicked and opened a teaser content.user_account_deleted: User’s account was removed or deleted.user_email_changed: User updated their email address.user_logged_out: User logged out from the application.

details: This field contains additional details about the type of event that occurs. Basically from and to page URLs for page_navigation, entity details for contracts, orders, meter readings, documents, journeys etc, external links opened, custom information updates etc.

{org_id}_portal_sessions_final This table stores the above attributes related to portal sessions.

{org_id}_portal_events_final This table stores the above attributes related to portal events.

Setting up Datalake

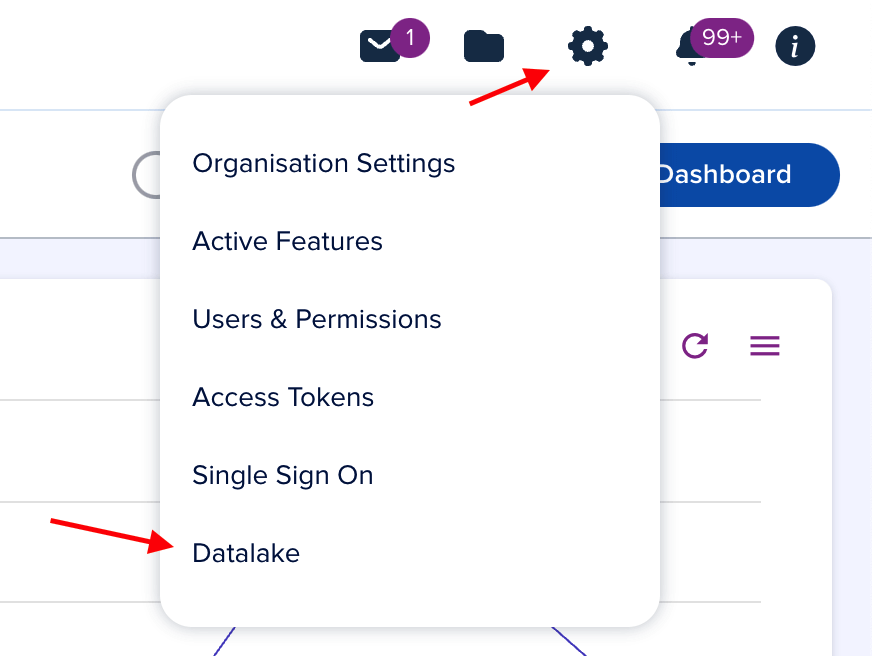

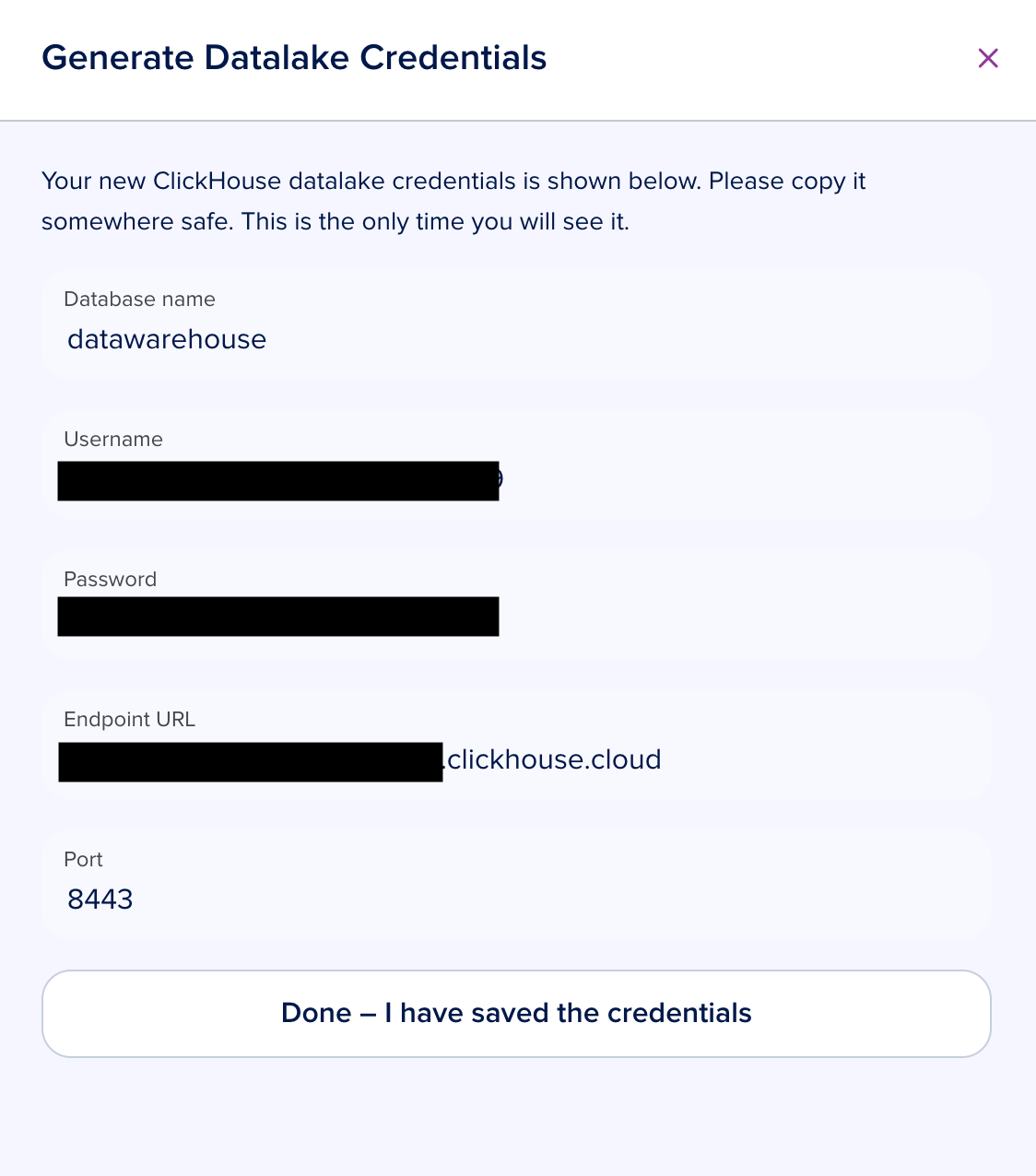

Generate datalake credentials

Navigate to the "Settings" menu on the top right in your organization's portal. Then locate the "Data Lake" section from the drop down menu.

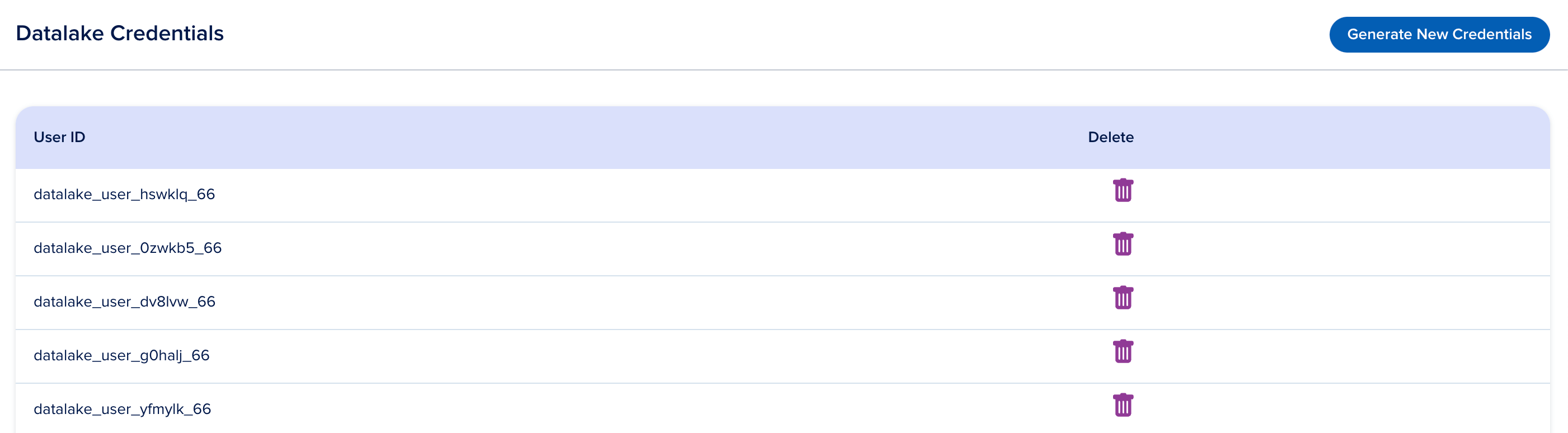

The

Data Lake pagewill display active credentials for your Data Lake. Here, you can revoke existing credentials or create new ones as needed.

To create new credentials, select the

Generate Credentialsbutton.A new set of credentials will be generated. Note that the password will be visible only once at the time of creation for security reasons, so it's crucial to save it securely.

Utilize the generated

username,host,port,databaseandpassworddetails to connect to the Data Lake from any BI tool or other data sources.

Querying the Data

Once you've set up the necessary credentials, you can connect to the Clickhouse data warehouse and query the data. There are two primary ways to interact with the data:

Directly Quering via SQL

You can write SQL queries to retrieve the data directly from Clickhouse using SQL functions. Below are examples illustrating how to query the data for insights.

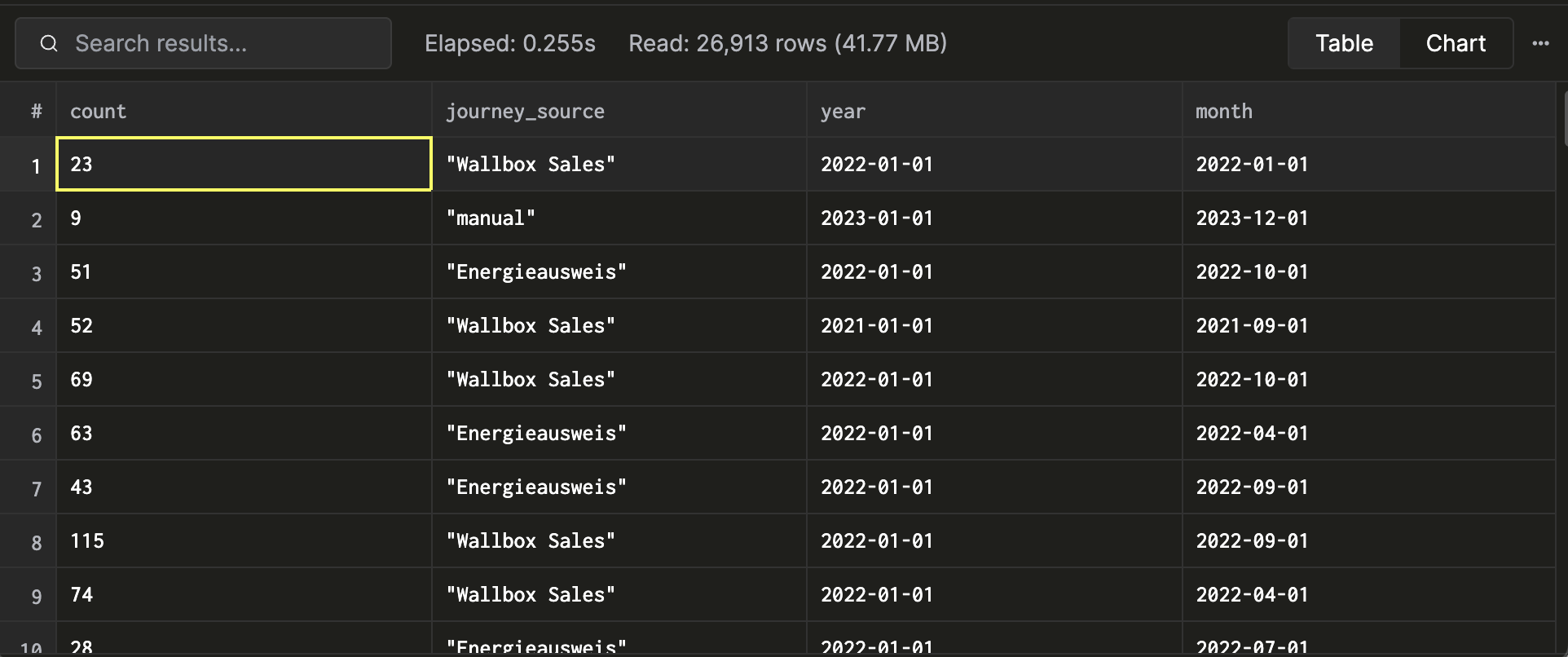

Example 1: Reporting Opportunities Created Over Time Suppose you need to create a report showing opportunities created over time, grouped by journey source, with a time granularity of months. You can use SQL to accomplish this task:

select

count(*) as count,

JSONExtractString(detail, 'payload', 'source', 'title') as journey_source,

toStartOfYear (timestamp) as year,

toStartOfMonth(timestamp) as month

from

entity_operations

where

and schema = 'opportunity'

and operation = 'createEntity'

group by

journey_source,

year,

month;

This SQL query retrieves data about opportunities created over time, extracts relevant information from the JSON payload, and aggregates it by year and month, providing insights into opportunities created during different periods.

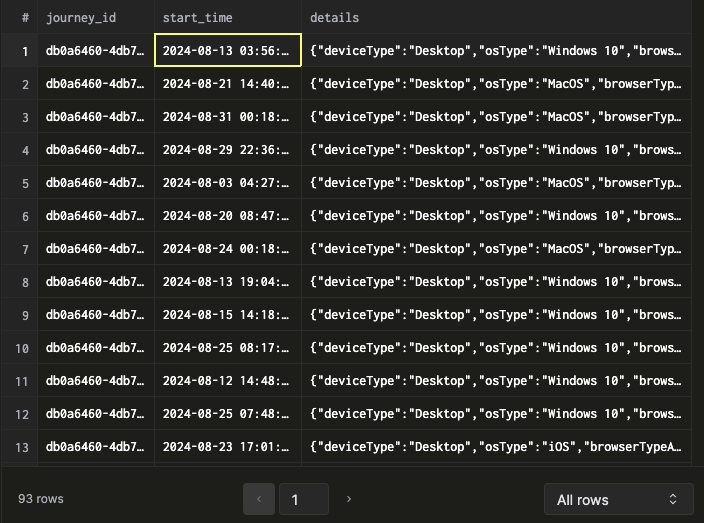

Example 2: Reporting Journey Sessions Created Over Time Suppose you need to create a report showing journey sessions created over time for a specific journey. You can use SQL to accomplish this task:

select

journey_id,

start_time,

details

from

{org_id}_journey_sessions_final

where

journey_id = {your_journey_id}

and

start_time > '2024-08-01 00:00:00'

This SQL query retrieves the journey sessions created over time for a specific journey

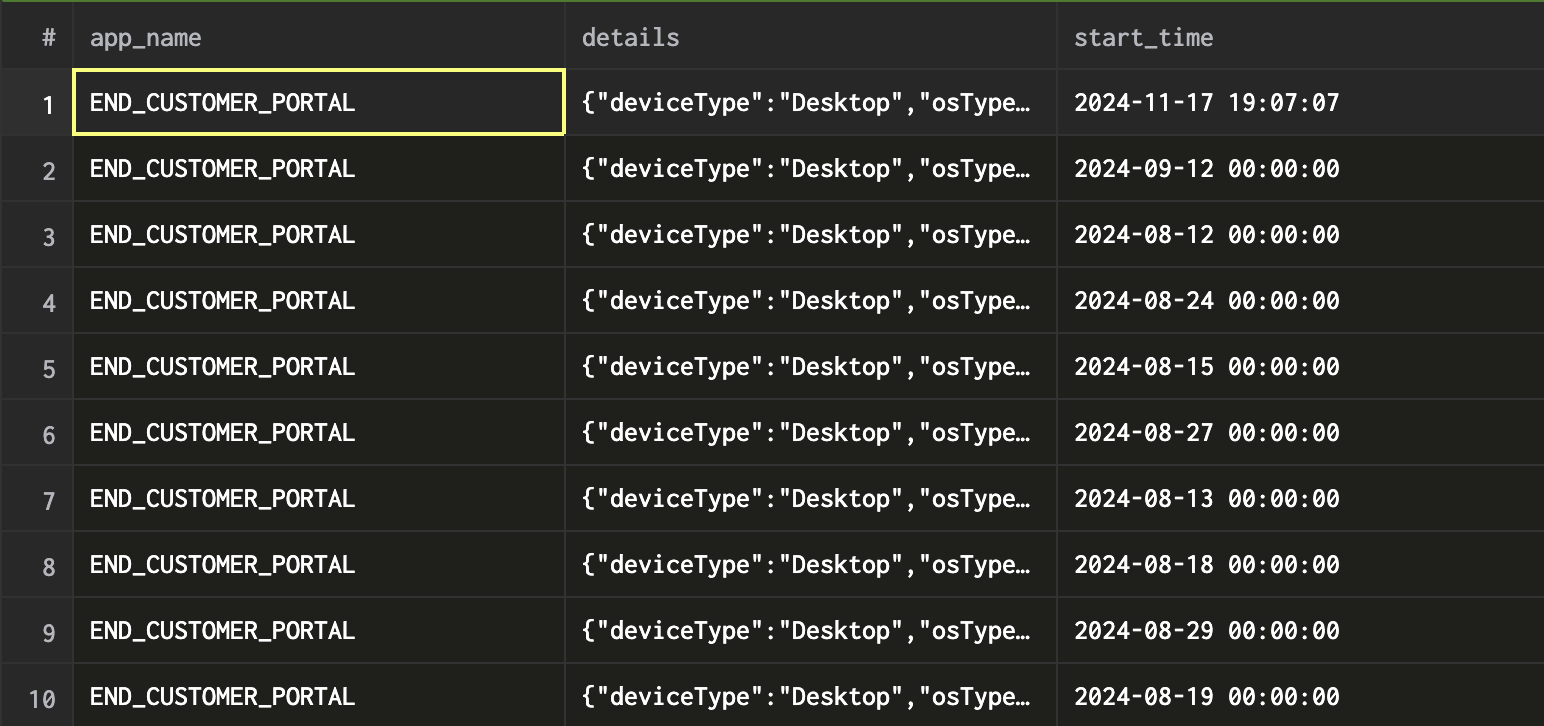

Example 3: Reporting Portal Sessions Created Over Time Suppose you need to create a report showing portal sessions created over time for a specific portal like customer portal or installer portal. You can use SQL to accomplish this task:

select

app_name,

start_time,

details

from

{org_id}_portal_sessions_final

where

app_name = {your_app_name}

and

start_time > '2024-08-01 00:00:00'

This SQL query retrieves the portal sessions created over time for a specific portal

You can use any SQL client to connect to the Clickhouse Data Warehouse (DWH) using the credentials provided. For more detailed information, please refer to this link.

Connecting to BI Tools

Alternatively, you can connect to Business Intelligence (BI) tools of your choice to load the data from Clickhouse and build reports and dashboards. Many BI tools support direct integration with Clickhouse, allowing you to create visually appealing and interactive reports based on your data or alternatively you can make use of official Clickhouse ODBC driver to establish the connection setup with Clickhouse.

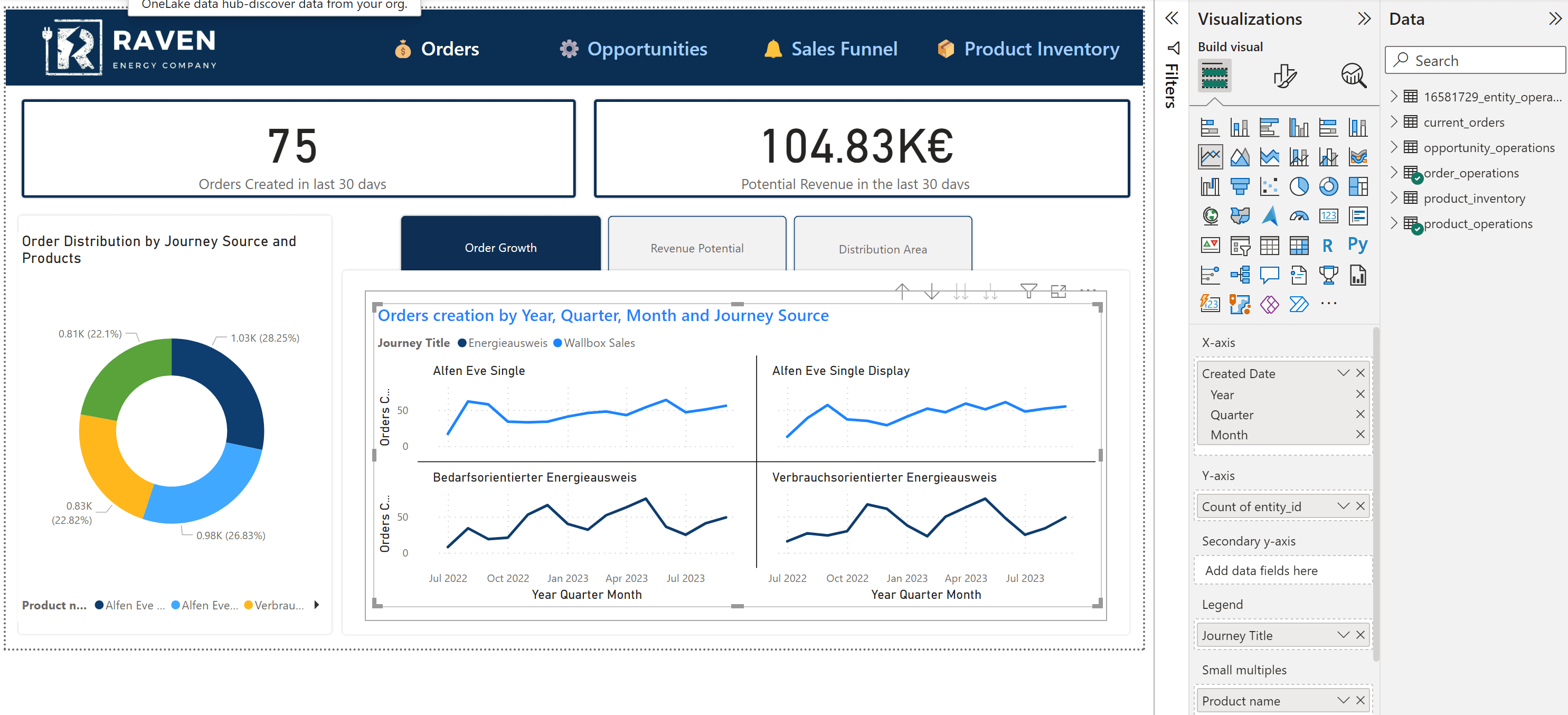

Example - we will walk you through an example of connecting to Power BI to create a demo BI report for

Raven Energy GmbH, an energy utility company with two distinct journeys and workflow processes for handlingWallboxandEnergieausweissales digitally. Each use case involves two products. We will leverage our Data Lake to set up a BI report for this scenario.

To get started with the various ways to establish a connection between Clickhouse and PowerBI, please refer to [this link] (https://clickhouse.com/docs/en/integrations/powerbi).

Steps to Create a Power BI Report:

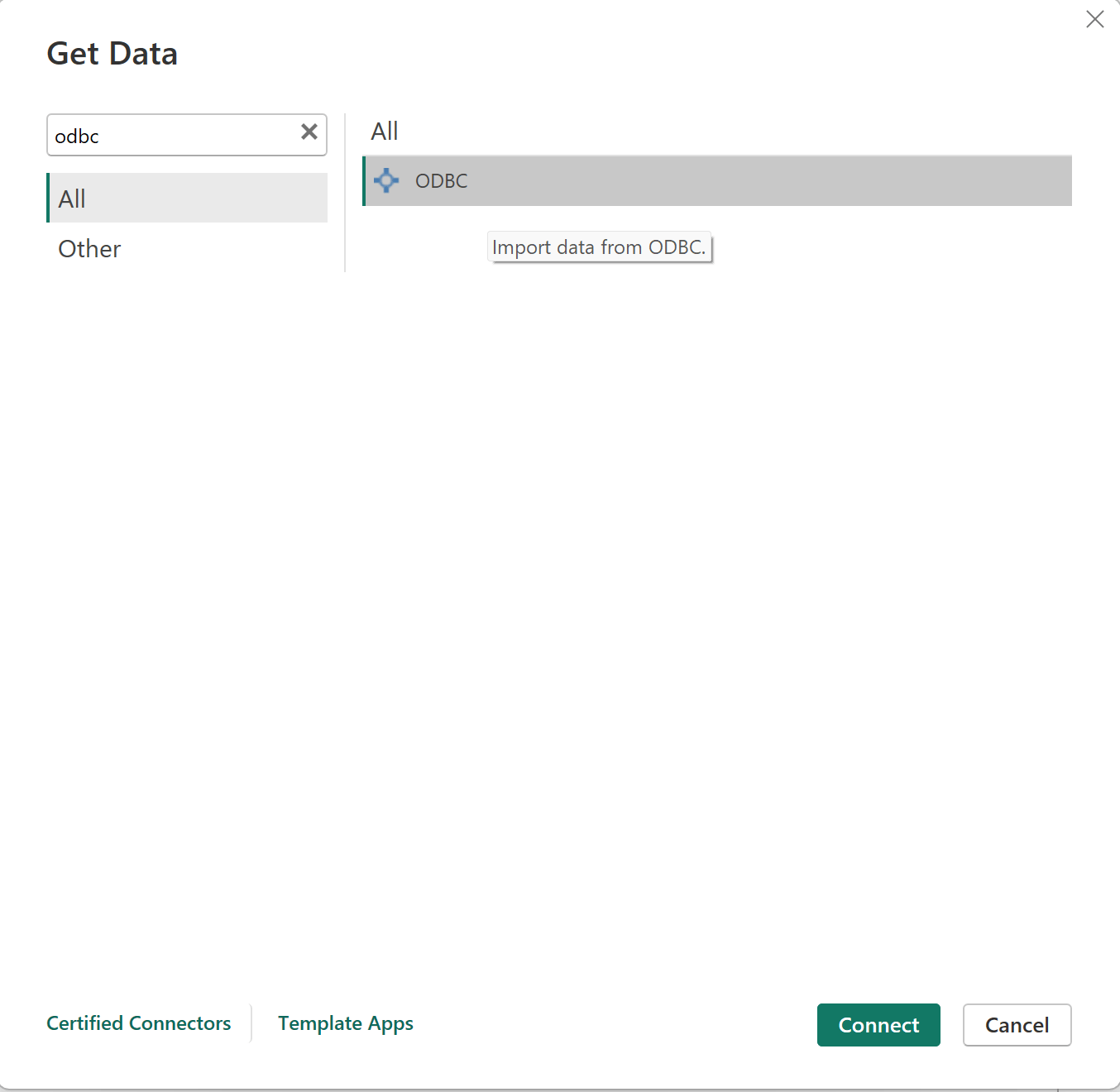

Open Power BI: Once you have installed all the necessary dependencies to set up an ODBC connection as specified in the link above, you can add a new data source by selecting the ODBC option from the list of supported connectors.

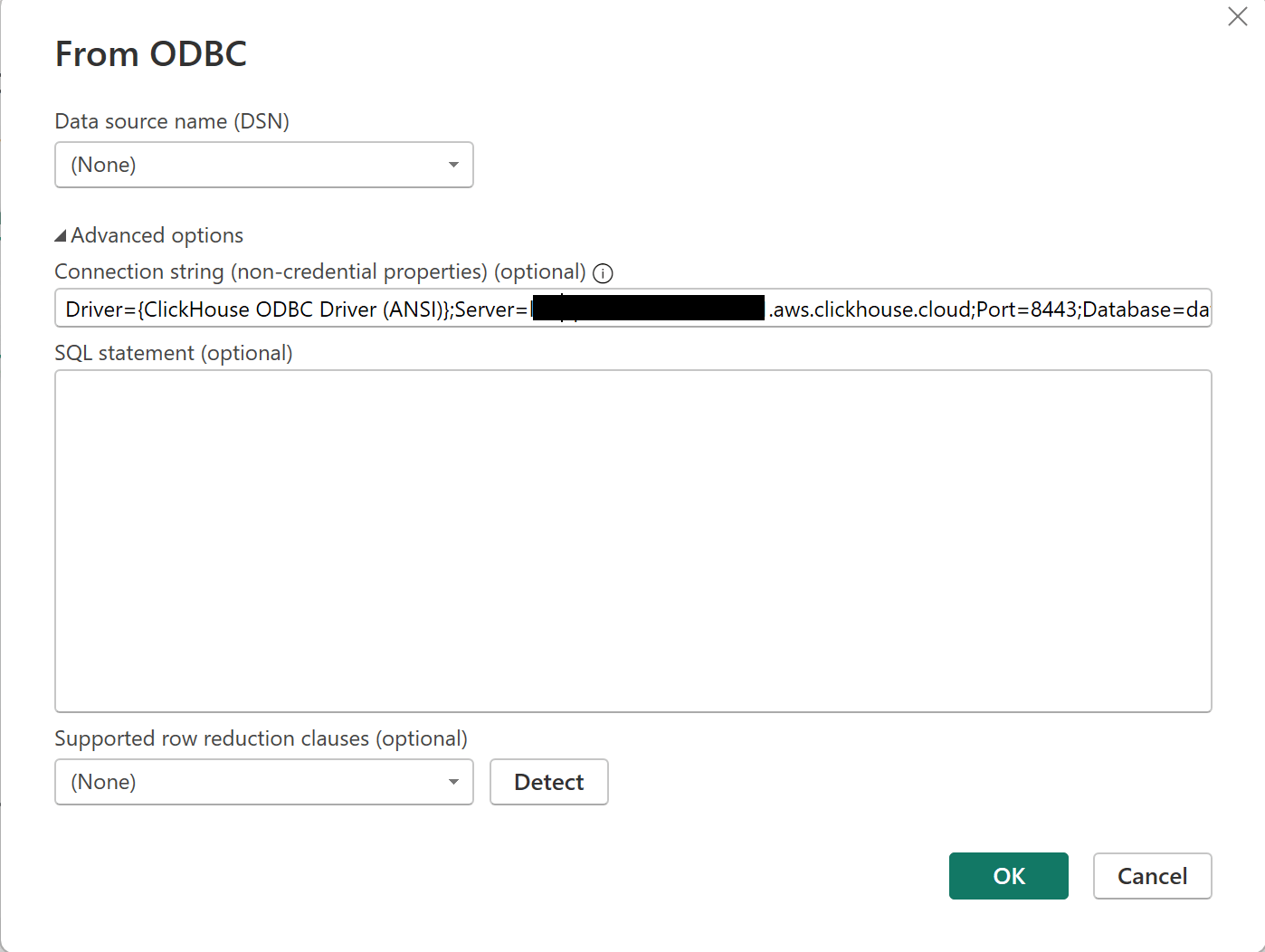

Enter Connection Details: Enter the required connection details, including the ODBC connection, username, password, and the specific database. Then, click "OK" to establish the connection.

Example ODBC Connection String - Driver={ClickHouse ODBC Driver (ANSI)};Server={replace-it-with-host};Port=8443;Database=datawarehouse;

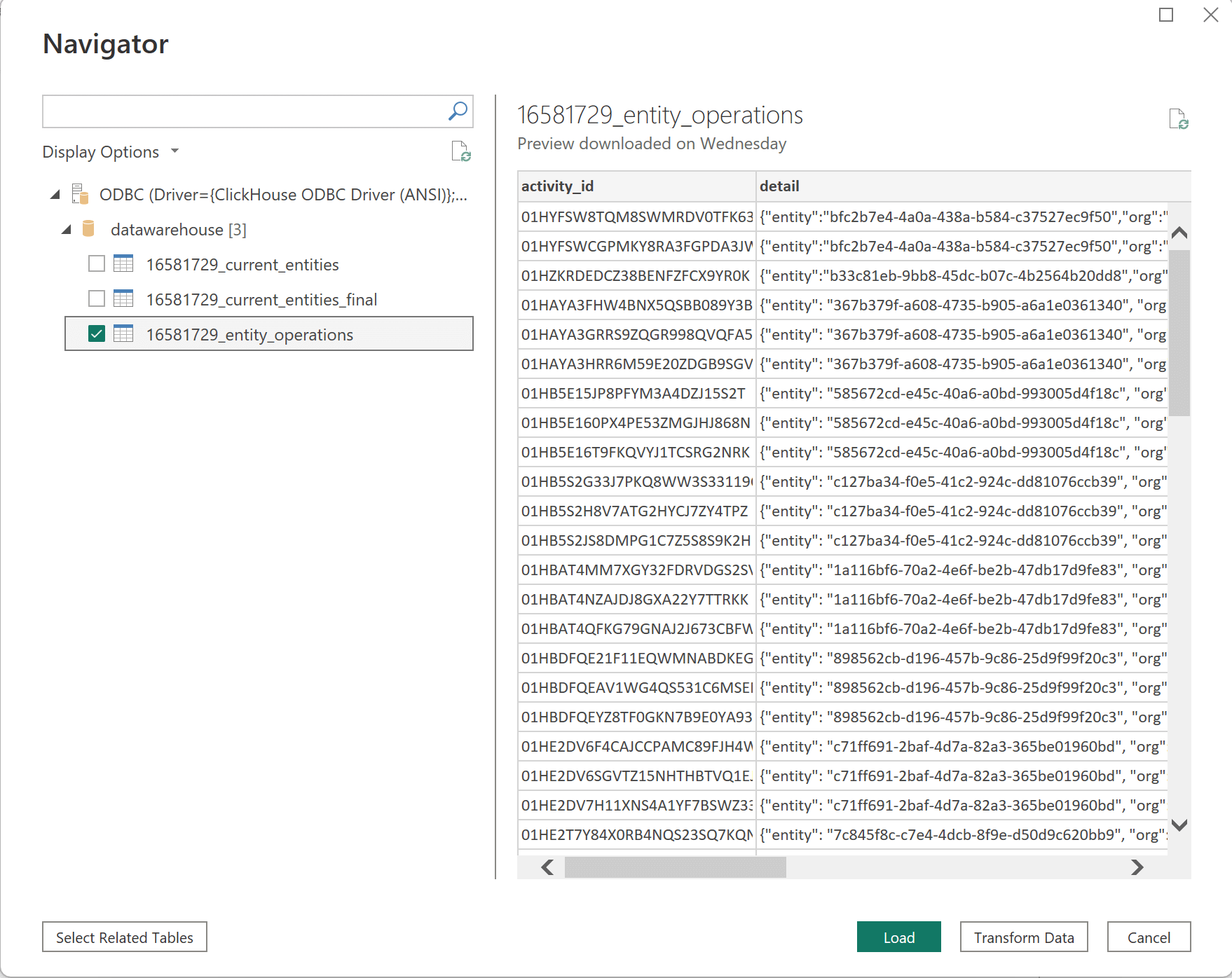

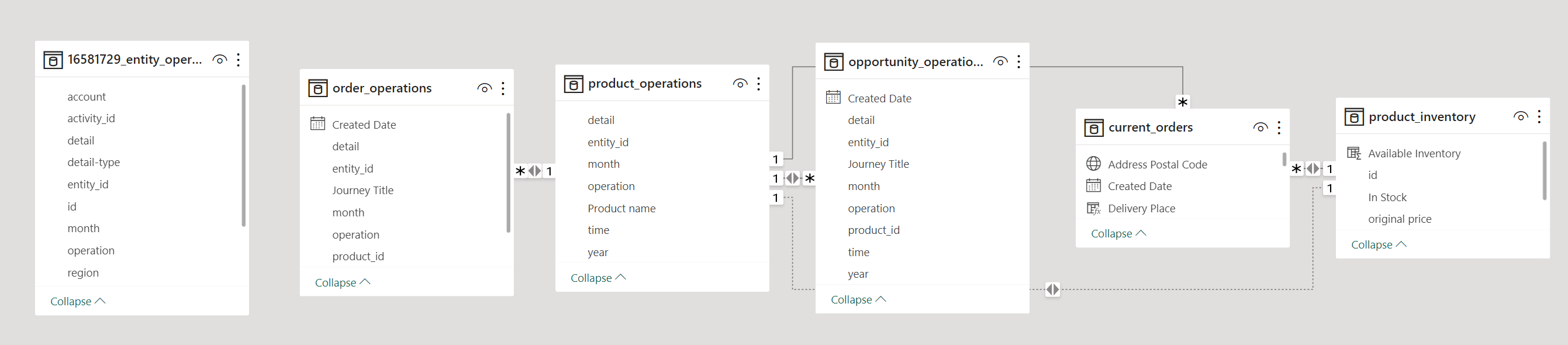

- Select Data: Once connected, Power BI will display the available schemas and tables. You can easily locate the "entity_operations" table within the "datawarehouse" schema, along with the "{org_id}_current_entities_final" simplified view for easier data access.

- Load or Transform Data: You have two options for data handling. You can click "Load Data" to load all the raw data directly into Power BI. Alternatively, you can click "Transform" to access Power BI's query editor, where you can define your custom transformation logic to preprocess and shape the data to your specific requirements.

- Data Modeling: After loading or transforming the data, you can begin setting up data models. Create relationships between different tables to enable seamless data exploration and reporting. For example, you can establish relationships between the entity_operations data and other relevant tables in your dataset.

- Report Creation: With the data modeling in place, you can start creating visually appealing reports within Power BI. Utilize various chart types, tables, and visuals to convey insights effectively.

Interactive PowerBI report using Epilot Datalake

Our Data Lake feature provides a powerful way to capture and analyze real-time entity operations, enabling you to gain valuable insights from your data. By understanding the data schema and following the steps to set up credentials, you can leverage this feature to build reports, perform analytics, and make data-driven decisions for your organization.

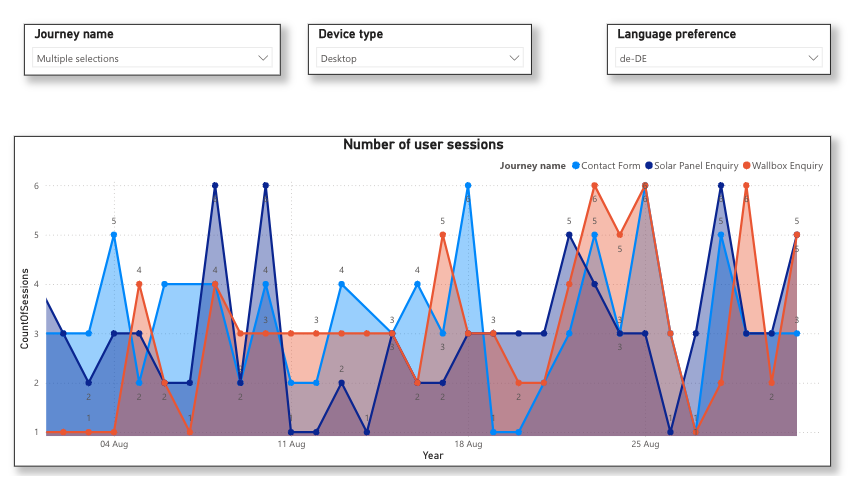

Interactive PowerBI report for Journey Analytics using Epilot Datalake

Our Journey Analytics Data lake feature offers a comprehensive solution to track and analyze user journeys in real-time, providing you with deep insights into how users interact with your journeys. By capturing detailed event data, such as step navigations, form submissions, and user exits, you can gain a clear understanding of user behavior and identify opportunities for optimization.

You can also refer to the following link to establish connection to different BI tools.

If you have any further questions or need assistance with data queries, please reach out to our team.